The Essential Guide to Evidence-Based Practice

(Evidence-based practice - Updated May 2023)

Your indispensable guide to evidence-based practice (EBP)

Evidence-based practice is becoming a real force in a rapidly growing number of organisations and public services. Even traditional organisations like the police have a growing body of evidence-based practitioners, along with functions that promote and embed evidence-based practice, develop skills and expertise, and allocate resources to EBP. This guide will take you through all the essentials of evidence-based practice, point you in the direction of additional resources and sources, and show you the relevant research evidence.

NB. This is a living, continually updated document. As a result it changes and is added to frequently as new research and practice-based evidence become available. If anything is missing or needs correcting, please do let us know.

What is Evidence-Based Practice?

Essentially evidence-based practice is about improving our decision-making by using clear, well-researched and evidenced justifications for why we do things in certain ways, with the ultimate goal of delivering continual improvements/innovations, learning and excellence in our organisation or business. In short, it is about developing and fostering best practice and thinking by looking at and critically considering the real evidence and data about an issue rather than just using personal subjective opinions or gut feel.

Probably the most avid users of EBP are the health care and aviation industries. Evidence-based practice has revolutionised the way health care is undertaken in many countries around the world and has improved health care outcomes and impact for patients as a direct result.

In the aviation industry evidence-based practice has dramatically increased safety, reduced costs and increased operational effectiveness across the board in what is one of the most heavily regulated industries on the planet.

We will be looking at examples of both of these industries' use of evidence-based practice throughout this guide.

Increasingly, clinicians, engineers and pilots in health care and aviation are basing most of their operational work on EBP, placing an ever-increasing emphasis on using and creating good evidence as a basis for their work and decisions. As a result, clinical expertise has increased alongside better patient outcomes, and in the aviation industry better safety, working practices, use of materials and construction methods have delivered greater efficiencies, profitability and productivity as well as better customer outcomes.

Definition of Evidence Based Practice

One of the more widely accepted definitions of evidence-based practice is provided by Dr. David Sackett, an American-Canadian medical doctor and pioneer of evidence-based medicine who died in 2015. He defines it as “the conscientious, explicit and judicious use of current best evidence in making decisions about the care of the individual patient. It means integrating individual clinical expertise with the best available external clinical evidence from systematic research.”1

Derivatives of this definition have been used and acknowledged extensively in many contexts, from medicine, policing, the military, construction and engineering to housing and social care.

In essence, organisational evidence-based practice (EBP) refers to the systematic process whereby decisions are made and actions or activities are undertaken using the best evidence available. The aim of evidence-based practice is to remove, as far as possible, subjective opinion, unfounded beliefs and bias from decisions and actions in organisations in order to achieve the goals of the organisation.

The four sources of evidence for evidence-based practice

There are four sources of evidence for EBP:

- Research evidence - preferably peer reviewed research / scientific literature

- Work-based research (trial and error testing)

- Feedback from the organisation and customers / clients / stakeholders

- Practitioner experience and expertise (See The role of experience in evidence-based practice)

The point of this is that evidence-based practice draws on evidence from across all four sources, not just one, in order to inform decisions. The idea is to have the 'best evidence' available to inform practice. The term best evidence refers to evidence from a number of sources, including research or scientific studies. The evidence from these sources doesn't make the decision. Rather, it informs the decision and helps guide the best course of action for the practitioner, who uses their experience and skills alongside the thinking and experience of other practitioners, data from the organisation, academic research and feedback from stakeholders, clients and customers. In short, evidence-based practice is founded on multiple sources of evidence and data (they are not the same thing - see this article: What's the difference between evidence and data).

Knowledge management and evidence-based practice

Evidence-based practice is essentially a form of knowledge management.13 Knowledge management refers to "the systematic management of an organization's knowledge assets for the purpose of creating value and meeting tactical & strategic requirements; it consists of the initiatives, processes, strategies, and systems that sustain and enhance the storage, assessment, sharing, refinement, and creation of knowledge."14

Types or forms of knowledge

There are considered to be two types of knowledge that inform decision-making in organisations:

- Explicit

- Tacit

Explicit knowledge refers to the codified, formal information that can be easily shared, such as this guide, instructions, book contents etc. Tacit knowledge, on the other hand, refers to the type of information that is difficult to transfer to others, such as our subjective and personal intuitive understanding about how we sense or feel things, or how we know how to do something. They are often referred to as know-what (explicit knowledge) and know-how (tacit knowledge).

The difference between evidence-based practice and ESTs

In 2006 an American Psychological Association Presidential Task Force on Evidence-Based Practice 2 made an interesting distinction between EBP and what are known as 'empirically supported treatments' or ESTs. A treatment in this context refers to any solution being proposed to deal with a problem. In essence, an empirically supported treatment is an evaluation of the evidence of what effect or impact a particular solution to an issue is likely to have in a given situation. Whilst empirically supported treatments may form part of the broader application of evidence-based practice, they are not on their own sufficient to be considered EBP. Empirically supported treatments start with the treatment and ask whether it will work in the situation at hand. Evidence-based practice, on the other hand, starts with the customer, client or patient and asks what research evidence will assist in achieving the best outcome for them. The goal with EBP isn't just to solve a particular problem but to find out what we can learn to obtain the best outcome for the end user.

In terms of a definition of evidence-based practice this helps to clarify the broader scope of the general and habitual practice of using best evidence to find the best outcome rather than simply evaluating the potential effectiveness of a particular solution.

Decisions based on best evidence

Evidence-based practice requires that operational decisions in the organisation are based on the best available, current, valid and reliable evidence. I emphasise the word 'evidence' because there is a critical distinction between evidence and data, which people often confuse.

The difference between evidence and data - See this article about the difference between evidence and data.

Opinion and Evidence based decisions

In order to achieve evidence-based practice, we have to be informed by a more objective evidence base. The traditional approach for leaders, managers, practitioners, coaches and consultants has been to make decisions on a more subjective basis, using:

- personal experience, (see this article about the role of experience in evidence-based practice)

- traditional practices,

- professional judgement,

- convention,

- habit,

- personal insight and deduction.

These approaches, however, can frequently lead to misguided and biased decisions, yet many individuals and organisations still regard them as the best method.

The devastating impact of opinion-based decisions - See this article about the devastating effects of opinion based decision-making.

The application of evidence-based practice does not mean that experience and judgment are no longer applicable. On the contrary, they remain vital, but only where they are used in conjunction with evidence to consider the facts about what approach to take or decision to make, to reduce the likelihood of bias, habit or cultural preference swaying the results.

The aim with the application of EBP and personal judgement is to apply them in a critical manner, avoiding or at least being aware of the assumptions being made.

The point here is that when thinking about or tackling a problem or intervention, it is important that our actions take account of what has been learned over time by previous practitioners and researchers, preferably using the evidence from high-quality research studies.4

Focus on the practical

Evidence-based practice is meant to focus on the practical, to help people understand what evidence there is, or is not, for their work practices, policies, procedures and decisions. Just because something appears to work doesn’t mean it works to the extent people believe it does, and there may be unseen side effects or consequences that a more fully explored and evidenced approach may well discover.

In medicine, EBP is referred to as a process that allows physicians and clinicians to assess research and guidelines on clinical procedures from highly trusted findings and then apply them in their daily practice.

Placed into the context of organisational evidence-based practice, this means that EBP is a set of processes that allows practitioners (employees), managers and leaders to assess research, guidelines, policies, procedures, working practices and decisions from trusted research findings and apply them in their daily practice, with an emphasis on daily practice.

This focus on the practical is really important. It is vital that individuals and groups in the organisation are motivated to implement changes and that they regard the introduction of EBP as useful, valuable and important. If many individuals feel that the existing way of doing things is still the best approach, then there may be resistance to adopting and using EBP. In short, a change of culture may have to take place in the organisation to facilitate EBP in practice, but more of this in the implementation section of this guide.

However, to convince individuals, there has to be reliable, rigorous and robust evidence that the interventions have worked in similar contexts and will be applicable to the given organisation. If it can be clearly shown that EBP will lead to more efficiency, profit, better working practices and outcomes, attitudes can change quickly and lead to a positive, favourable consensus to engage with evidence-based practice. Overall, as long as the focus remains on operational practice rather than turning employees and managers into academic researchers, it is significantly more likely that people in organisations will be able to discern its value and therefore accept and engage with EBP.5

The Principles of Evidence-Based Practice

Evidence-based practice is based on two principles:

- Understanding that scientific evidence alone is insufficient to guide decision-making, and that

- Within all the available sources of evidence, hierarchies exist - some types of evidence are seen as more valid or carry more weight than others (see the section below: Hierarchy of Evidence)6.

The basic 5 stages or steps of evidence-based practice - what's involved?

Over the past 20 years or so, the research into the foundational principles of EBP has developed into a coherent framework of steps or stages for EBP.7 They are as follows:

- First of all, EBP requires an assessment of the given situation and formulation of the basic question or questions (hypotheses) that need to be answered in order to address the problem.

- Secondly, the evidence should be acquired through a systematic search of online resources, books and, in many cases, specialist journals/databases in the field. There is a convention as to what is considered to be the best evidence - see the next section of this guide.

- An assessment and appraisal of the materials/evidence needs to take place to consider the applicability and validity of the given evidence about what type of action or intervention to pursue and to inform a final decision.

- The next stage involves integrating the new knowledge into the organisation and applying it in practice.

- Finally, an evaluation of performance takes place through mechanisms, such as feedback from relevant stakeholders.

The 5 A's of Evidence-based practice

These 5 steps are also known as the 5 A's:

- Asking a critical question

- Acquiring the evidence

- Appraising and assessing the evidence

- Applying the best evidence to make an action plan and take action

- Assessing the outcomes of the action taken

Hierarchy or Levels of Evidence

Not all evidence is created equal; it tends to be graded on a number of factors:

- Generalisability - how applicable are the findings to a wide range of scenarios, or do these findings only really relate to a very specific set of circumstances or conditions?

- Reliability - how stable and consistent are the results of a study over time? If we do the study again, are we going to get the same results?

- Replicability - can the study be repeated and retested? If not how can we make any claims of reliability?

- Validity - do the methods and design of the study actually measure or describe what the study is purporting to show? In terms of validity there are two forms:

  - Internal validity - do the methods and instruments used actually measure or describe what we think they are, or are they measuring or describing something else? Examples of factors that can change the validity of a study include:

    - Subject variability - you have a particularly unrepresentative subject pool. They are all university students and don't represent the general population, for example.

    - Size of the subject population - a study based on looking at 5 people is quite different from a study of thousands of people, and sample size will affect the validity of each differently.

    - Time - was the study conducted once in a short space of time or over a longer period of time?

  - External validity - this refers to whether the study in question is applicable to the situation you want to apply it to. The results of a study conducted on pre-school children might not have much external validity when applied to a group of combat soldiers, for example.

- Authority - has the study come from a well known and respected academic/university or a consultancy selling a product or service for example?

- Peer review - has the study or paper been through a process of checking and critique by fellow researchers, or is it self-published?

Evidential sources

In general terms there are two forms of sources of evidence:

- Primary sources - this refers to data that has come direct from the source in question. For example eye witness accounts, legal or historical documents, raw data, the direct results of experiments or research.

- Secondary sources - these include second-party analysis, summaries, evaluations, opinions, comments, articles etc. that do not have first-hand experience or knowledge of the issue or event.

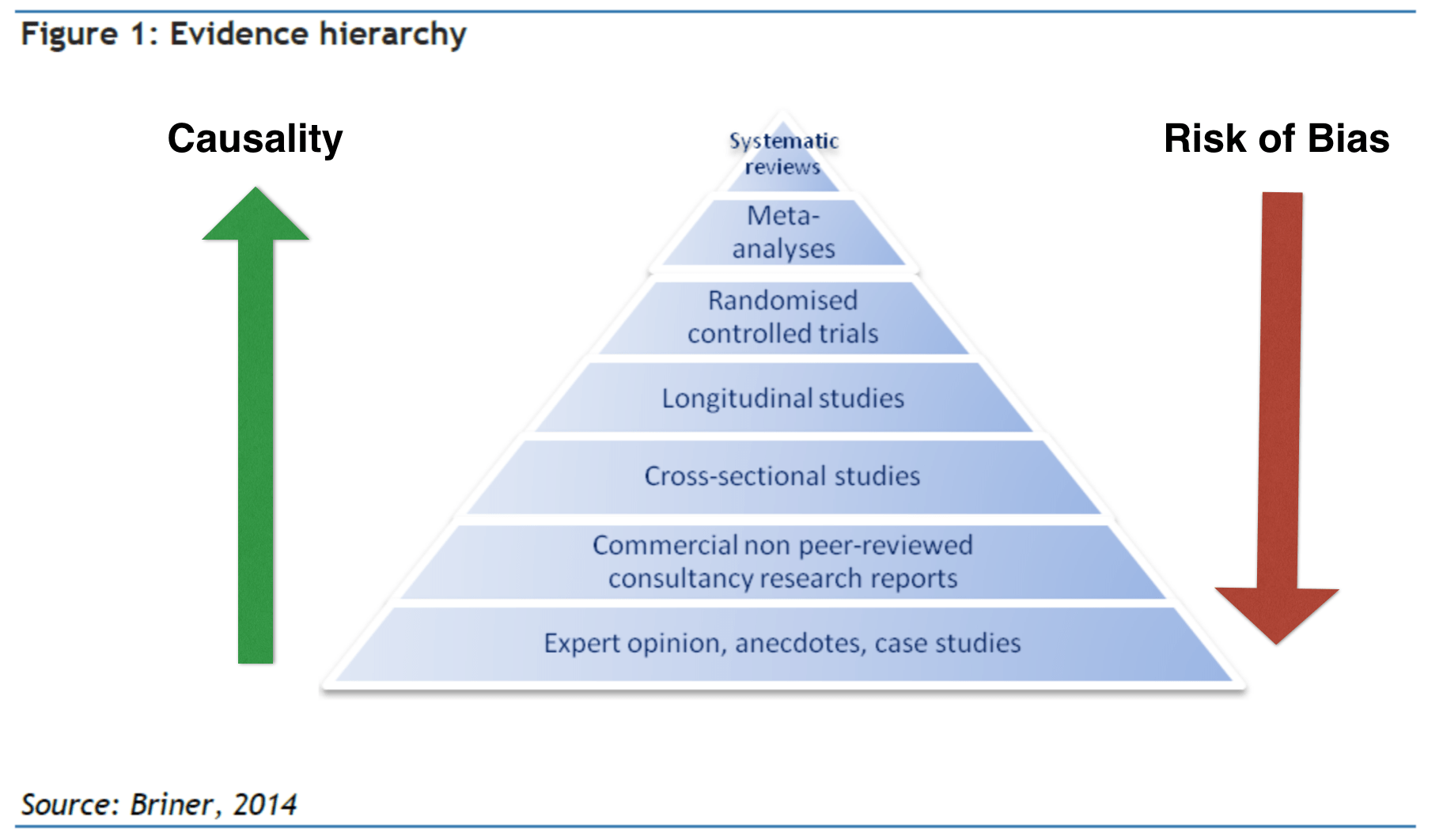

Hierarchy of research

In terms of confidence about the findings all research sits in a hierarchy:

As we go up the pyramid there is more likelihood of greater levels of reliability, validity, generalisability and confidence and as we go down there is an increased risk of bias, and potentially lower levels of reliability, validity, generalisability and confidence. This however does not mean that case studies have no usefulness. It just means that they are situated in a particular context that may or may not apply to your situation.

Before using any evidence, the evidence-based practitioner needs to be able to understand and evaluate its nature, with reference to the context within which they are going to use it.

The History of Evidence-Based Practice

The Ancient Greeks - Pedanius Dioscorides and Galen of Pergamon

The history of evidence-based practice can actually be traced back to the ancient Greeks, in particular Pedanius Dioscorides (AD 40-90), a Greek physician, pharmacologist, botanist, and author of De Materia Medica, a 5-volume Greek encyclopedia about herbal medicine and related medicinal substances (known as a pharmacopeia) that was widely read for more than 1,500 years. He was later employed as a medic in the Roman army.

Later came Galen of Pergamon (AD 129-216), who also served the Romans. Regarded as the most accomplished of all medical researchers of antiquity, Galen influenced the development of various scientific disciplines, including anatomy, physiology, pathology, pharmacology, and neurology, as well as philosophy and logic.

Archie Cochrane and modern day evidence-based practice

However, modern-day evidence-based practice really stems from the work of Archie Cochrane, a Scottish doctor noted for his book Effectiveness and Efficiency: Random Reflections on Health Services.6

This book argued for a more systematic and rigorous approach to medicine and championed the use of randomised controlled trials to make medicine more effective, efficient and reliable. This campaign for the use of randomised controlled trials eventually led to the development of the Cochrane Library database of systematic reviews (more about these below), the establishment of the UK Cochrane Centre in Oxford (http://uk.cochrane.org) and the international Cochrane Collaboration, a collection of 14 evidence-based medical research centres. The tag line of the Cochrane centres is "Trusted evidence. Informed decisions. Better health." 7, which sums up its mission nicely.

Cochrane is known as one of the fathers of modern clinical Evidence-Based Medicine and is considered to be the originator of the idea of Evidence-Based Medicine.

He was known for arguing that medical strategies should be thoroughly and rigorously tested before being used on human beings. This advocacy for the use of evidence before practice laid the foundation of evidence-based medicine. He is particularly known for emphasising the need for randomised studies to be carried out in different places to improve the accuracy of the evidence and to reduce bias, that is, the tendency of medical practitioners to push their favourite home-grown theories about best practice.

This shift from the personal experience-based intuitive and deductive reasoning of individual medics into a systematic, scientific and rigorous process was really the foundation of all modern evidence-based practice.

This approach to the practice of medicine rapidly gained ground as the results and outcomes for health care became measurably apparent. In the early stages of evidence-based medicine, some practitioners railed against it, stating that it was too slow and negated the vast experience of many professional medics.

The Four Elements of Evidence-Based Practice

More recently, however, evidence-based medicine has come to be seen as a union of four elements:

- The best research evidence available

- The expertise and experience of the clinician (See this article about the role of experience in evidence-based practice)

- The patient's or customer's values and preferences

- The environment or background existing at the moment.

This last element is important, as practising medicine in a well-staffed, well-resourced hospital in the West is often entirely different to doing so in the middle of a battlefield in a developing country where resources are few and far between. This idea of evidence-based medicine really came out of the work and efforts of Dr. David Sackett, who argued in 1996 that evidence-based practice is not solely based on research 8 but also embraces medical expertise. He further explained that patients’ needs and preferences could be taken into account in evidence-based practice, and this is what revolutionised evidence-based practice.

The model of evidence-based practice

This model of practice has been embraced in several sectors of medicine, such as nursing, surgery and dentistry, as well as in aviation and organisational evidence-based practice, and looked like this:

The latest Transdisciplinary Model

As a result of more recent research (9) however the model has now become a transdisciplinary model based on the recognition that most problem solving is done with reference to other disciplines and areas of expertise and the fact that decisions frequently span a wider arc than purely in the centre of the three areas of influence. The accepted model now looks more like this:

The Transdisciplinary Model displays the components of EBP and how they can be applied at larger organisational levels, such as in medical facilities or any other organisation. The intention here is to show that evidence-based decision-making spans a range of sources: it is not purely based on academic research and incorporates more operational and experiential elements of expertise.

The Iowa Model of Evidence-Based Practice

In 2017 researchers and nursing EBP practitioners based at the University of Iowa tested and published the Iowa Model of Evidence-Based Practice (16). This is a decision-making matrix that aims to help practitioners navigate the evidence-based practice process:

- Identify the triggering issues or opportunities

- State the question or purpose

- Is this topic a priority? - If not do not proceed

- If yes - Form a team

- Collate, analyse and synthesise the body of published evidence

- Conduct systematic research / search

- Weigh quality, consistency and quantity of studies with the risk

- Is there sufficient evidence to act? - If not, conduct research to provide the evidence

- If there is sufficient evidence - Design and pilot changes

- Is the change robust enough and appropriate for adoption of the changes into routine practice? - If not consider alternatives and redesign action and test

- If yes, integrate and embed into practice

- Disseminate results and enter process and results into the organisational knowledge management system
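The decision points in the list above can be read as a simple branching procedure. The following is only a minimal sketch of that reading, not the published Iowa Model itself: the names `IowaAnswers` and `iowa_next_steps` are hypothetical, and the three yes/no answers are assumed to be supplied by the team working through the process.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class IowaAnswers:
    """Yes/no answers at the three decision points in the list above (hypothetical names)."""
    is_priority: bool
    sufficient_evidence: bool
    change_appropriate: bool


def iowa_next_steps(answers: IowaAnswers) -> List[str]:
    """Return the steps the list above prescribes for a given set of answers."""
    steps = [
        "Identify the triggering issues or opportunities",
        "State the question or purpose",
    ]
    if not answers.is_priority:
        # Decision point 1: the topic is not a priority.
        steps.append("Do not proceed")
        return steps
    steps += [
        "Form a team",
        "Collate, analyse and synthesise the body of published evidence",
        "Conduct systematic search",
        "Weigh quality, consistency and quantity of studies with the risk",
    ]
    if not answers.sufficient_evidence:
        # Decision point 2: not enough evidence to act on.
        steps.append("Conduct research to provide the evidence")
        return steps
    steps.append("Design and pilot changes")
    if not answers.change_appropriate:
        # Decision point 3: the piloted change is not ready for routine practice.
        steps.append("Consider alternatives, redesign and test")
        return steps
    steps += [
        "Integrate and embed into practice",
        "Disseminate results and record them in the knowledge management system",
    ]
    return steps


for step in iowa_next_steps(IowaAnswers(True, True, False)):
    print("-", step)
```

Each early `return` corresponds to one of the "if not" branches in the list, so the function stops at the first point where the process would loop back or halt.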

Problems faced with implementing evidence-based practice

A number of studies (10) show that whilst many specialists look upon the idea of EBP in a favourable way, there are barriers in understanding how to translate evidence and apply it to practice. Many different fields, from business to sport, need good research to be properly disseminated and implemented in the organisation if it is going to lead to real, practical improvements. However, a series of problems creates barriers to implementing evidence-based practice. The four major barriers to successful implementation have been found to be:

Access to resources has been cited as a primary problem for many individuals and organisations. Access to professional resources required to develop EBP is often quite difficult and time consuming. In some cases this just means the use of textbooks and Web sites. However, some areas of practice may require access to electronic online journals or databases with specialist research and this is often limited. In some cases, the specialist knowledge required for the organisation may require an investment into resource access and the skills to translate the sources into useful material. Nonetheless, improving knowledge of how to use freely available online resources may be sufficient to allow for the rapid development of EBP.11

Time constraints are regularly cited in every profession as being a key barrier to the application of EBP. For instance, in education, research indicates that teachers and instructors suggest that they are generally pushed to the limit and that the time needed to do research and introduce best practice is difficult to find. This is often because, in an ever-demanding workplace, specialists in most fields find that they often have to “wear multiple hats”, leading to legitimate concerns about time availability. 12 A number of studies have found that the lack of time to source, obtain and translate research studies into something practical is the number one barrier to implementing and maintaining evidence-based practice across all professions.

Another key issue is a lack of support at organisational and managerial levels to give people the time to engage with the research needed to implement EBP properly. This can be overcome through the introduction of reliable administrative support in the organisation and a careful consideration of priorities when the application of EBP is likely to bring substantial transformative benefits or by using specialist research translators and providers like The Oxford Review.

At the bottom of all of the barriers to implementation of evidence-based practice is just a simple lack of knowledge and understanding. Individuals and organisations are often completely unaware of the new developments in their field, or the scientific evidence available to them that could completely revolutionise their organisation, outcomes and thinking. In addition, many specialists lack the basic skills required to assess the value and applicability of research, as well as its trustworthiness. Educational workshops and training within an organisation can be vital to help understand and integrate new practices into everyday operations. Employees may benefit from training sessions led by external experts. The building of knowledge and understanding of how to use EBP can give specialists the confidence to go beyond a sole reliance on poor-quality subjective approaches.12

A 2019 study (17) found that new practitioners tend not to transfer their learning of evidence-based practice into the workplace for a number of specific reasons:

There tends to be an essential disconnection in practitioners' minds between evidence and research. This backs up another 2017 study which found that practitioners often only have a shallow understanding of evidence and fail to understand the importance of systematic and valid research evidence to inform practitioner experience and the immediate evidence of the situation.

Additionally the study found that:

1. Practitioners know that they should be using research evidence as part of the evidence-based mix but recognise they aren’t.

2. This, it appears, is because they move from a culture of research centrality (at university) into a culture of practice, immediacy and reduced time.

3. From a student’s and practitioner’s point of view, research is someone else’s job.

4. Practitioners, and particularly new practitioners, who usually work within a hierarchy and usually at its bottom levels, feel that their voice, opinion and knowledge of the research don’t carry much weight.

5. In the intensity of daily work and practical concerns, knowledge production and study tend to be seen as situated in the college or university and not the work environment.

However, the study found that the culture of the workplace appeared to be a prime indicator of whether or not new practitioners engage with evidence-based practice.

One of the biggest issues and barriers is how evidence-based practice is promoted and people are introduced to it.

See this article about Why evidence-based practice probably isn’t worth it…

Developing evidence-based practice in organisations

There are a number of key issues that need to be addressed when you are trying to get evidence-based practice going in an organisation:

- Developing the skills, attitudes, behaviours and knowledge required of evidence-based:

  - Practitioners

  - Supervisors

  - Mentors/trainers/educators

  - Managers, and

  - Leaders

- Creating, developing and maintaining the systems and procedures needed

- Obtaining, creating and developing the resources needed

Developing the skills, attitudes, behaviours and knowledge required of evidence-based

A systematic review of post-graduate development of medical evidence-based practitioners (11) found that whilst standalone classroom-based teaching improved knowledge, it did not improve skills, attitudes or behaviour. However, the research shows that clinically integrated teaching (actually using EBP, and being mentored / coached, in the context in which the practice is going to be used) improved knowledge, skills, attitudes and behaviour.

The skills required of evidence based practitioners

There is a series of six distinct skill sets that EBP practitioners need: "

- Asking: translating a practical issue or problem into an answerable question

- Acquiring: systematically searching for and retrieving the evidence

- Appraising: critically judging the trustworthiness and relevance of the evidence

- Aggregating: weighing and pulling together the evidence

- Applying: incorporating the evidence into the decision-making process

- Assessing: evaluating the outcome of the decision taken

to increase the likelihood of a favorable outcome." 3.

Evidence-based practice competencies

A 2014 study was able to discern that there are 24 evidence-based practice competencies that contribute to a proficient practitioner. 13 are basic level competencies and the remaining 11 are advanced level competencies:

- Questions operational practices for the purpose of improving the quality of operations.

- Describes problems using internal evidence (internal evidence* = evidence generated internally using internal assessment data, outcomes management, and quality improvement data).

- Participates in the formulation of critical questions using PICOT* format (*PICOT = Population; Intervention or area of interest; Comparison intervention or group; Outcome; Time).

- Searches for external evidence to answer focused questions (external evidence = evidence generated from research).

- Participates in critical appraisal of pre-appraised evidence (such as practice guidelines, evidence‐based policies and procedures, and evidence syntheses).

- Participates in the critical appraisal of published research studies to determine their strength and applicability to operational practice.

- Participates in the evaluation and synthesis of a body of evidence gathered to determine its strength and applicability to practice.

- Collects practice data (e.g. individual patient/customer data, quality improvement data) systematically as internal evidence for decision making in the care of individuals, groups, and populations.

- Integrates evidence gathered from external and internal sources in order to plan evidence‐based practice changes.

- Implements practice changes based on evidence and clinical expertise and patient preferences to improve care processes and customer outcomes.

- Evaluates outcomes of evidence‐based decisions and practice changes for individuals, groups and populations to determine best practices.

- Disseminates best practices supported by evidence to improve quality of service and customer outcomes.

- Participates in strategies to sustain an evidence‐based practice culture.

24 evidence-based practice competencies

- Systematically conducts an exhaustive search for external evidence to answer critical questions.

- Critically appraises relevant pre-appraised evidence (i.e. guidelines, summaries, synopses, syntheses of relevant external evidence) and primary studies, including evaluation and synthesis.

- Integrates a body of external evidence from research and related fields with internal evidence* in making decisions about patient care.

- Leads transdisciplinary teams in applying synthesised evidence to initiate clinical decisions and practice changes.

- Generates internal evidence through outcomes management and EBP implementation projects for the purpose of integrating best practices.

- Measures processes and outcomes of evidence‐based critical decisions.

- Formulates evidence‐based policies and procedures.

- Participates in the generation of external evidence with other professionals.

- Mentors others in evidence‐based decision making and the EBP process.

- Implements strategies to sustain an EBP culture.

- Communicates best evidence to individuals, groups, colleagues and policy makers.

Advanced evidence-based competencies
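The PICOT format listed among the basic competencies above can be sketched as a small data structure. This is a minimal illustration only: the class name `PicotQuestion`, the sentence template and the example values are assumptions made for this sketch and do not come from the 2014 study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PicotQuestion:
    """One focused question, split into the five PICOT elements."""

    population: str    # P: who the question is about
    intervention: str  # I: intervention or area of interest
    comparison: str    # C: comparison intervention or group
    outcome: str       # O: the outcome of interest
    time: str          # T: the time frame

    def as_sentence(self) -> str:
        # Hypothetical template; real PICOT questions are worded case by case.
        return (
            f"In {self.population}, how does {self.intervention} "
            f"compared with {self.comparison} affect {self.outcome} "
            f"within {self.time}?"
        )


# Hypothetical example values, for illustration only.
question = PicotQuestion(
    population="call-centre staff",
    intervention="weekly coaching",
    comparison="no coaching",
    outcome="customer satisfaction scores",
    time="six months",
)
print(question.as_sentence())
```

Writing the question down element by element makes it easier to spot a missing comparison group or time frame before the search for external evidence begins.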

The role of experience in evidence-based practice

Evidence-based practice does not depend solely on peer-reviewed research. Experience plays a vital role in evidence-based practice, but not just any experience counts. There is experience and there is experience. Understanding the role of experience in evidence-based practice, and which types of experience matter, is important.

See the rest of this article: The role of experience in evidence-based practice

Critical leadership behaviours

A 2020 study (25) looking at how leadership and management behaviours can make the difference when integrating evidence-based practice into employees’ working lives found that three main conditions and three strategies predict successful evidence-based practice implementation.

The study found that one of the biggest problems for leaders in promoting and supporting evidence-based practice, aside from a lack of knowledge and skills about using evidence-based practice, was creating space, time and room for engaging with the practice. The study found that leaders tend to focus on the day job and have little space or time for integrating or focusing on evidence-based practice and supporting its implementation.

The study also found that there are three main conditions that influence whether or not a leader is likely to make space/room for engaging with evidence-based practice supporting behaviours:

- The current organisational rules, procedures and policies can mean that leaders are otherwise engaged/distracted and time poor. In essence, this means that leaders are restricted by limited resources and usually lacking any form of system to instigate change and support evidence-based practice.

- Where organisational culture focuses on task achievement and standardising routines above change, this tends to be characterised within an organisation as the day-to-day practical work being seen as "real work" and anything else, like focusing on change or promoting evidence-based decision-making, as less important. Another symptom of this factor is where work planning focuses almost wholly on work tasks and does not allow room for other activities, such as evidence-based practice.

- High workloads and insufficient strategies for creating space for other activities, such as evidence-based practice and quality decision-making. This is usually identified through the fast pace of work.

The study identified three strategies that can help to create change and the space for evidence-based practice:

- Positioning for evidence-based practice. This usually means creating time and space for evidence-based practice outside of the usual workflows. Three tactics were identified that help with this:

- Leaders ensuring that they have the capacity, knowledge and skills for evidence-based practice promotion and support.

- Working with other leaders in small leadership teams to solve evidence-based practice promotion support problems.

- Lastly, adjusting their own workloads so that they have the space to promote and support evidence-based practice integration.

- Executing evidence-based practice. This means:

- Creating daily patterns of behaviour within the organisation that involve the four aspects of evidence-based practice:

- External research

- Employee knowledge/experience/wisdom

- Customer/client experience/knowledge/wisdom

- Evidence-based testing and innovation.

- Motivating employees by making evidence-based practice advantageous for them.

- Encouraging and supporting employees to manage their own time and create space for evidence-based practice.

- The provision of coaching and training for employees and managers.

- Acting as a buffer between workloads and the need for evidence-based practice and supporting staff by setting aside time and space for engagement in evidence-based practice.

- Interpreting evidence-based practice responses. This involves a number of leadership and management activities including:

- Leaders and managers helping employees by finding good sources of useful research information in a format that employees can readily use and understand.

- Actively gathering feedback from employees about what is working and not working around evidence-based practice and then acting on it.

- Actively observing employees engaging with evidence-based practice and seeing what can be done to make things better.

- Watching and observing the consequences of evidence-based practice (positive, neutral and negative consequences) and working out how to capitalise on the positive and deal with the neutral or negative consequences.

- Recognising that there is often a conflict between standardised policy-driven working practices and innovative new practices that emerge from evidence-based practice and holding the space, so that new practices can be trialled and tested effectively.

So, how can you tell how good a piece of research is and what do we mean by good?

In evidence-based practice circles, the accuracy and reliability of the research is an important factor when deciding to include a study in the evidence-base for a decision, especially clinical or engineering decisions where people’s lives are on the line.

GRADE

One way that evidence-based practitioners judge the quality of research is to use the GRADE system or framework (18). GRADE stands for Grading of Recommendations, Assessment, Development and Evaluations, and is used extensively in medical scenarios.

GRADE is now also being used in engineering and organisational evidence-based practice, and is the basis of many systematic reviews.

The 4 levels of evidence

When choosing studies to include in a decision, GRADE offers four levels of evidence, or four levels of certainty in the quality of the evidence/study:

- Very low – The real effect is probably very different from the reported findings.

- Low – The true effect is quite likely to be different from the findings of this study.

- Moderate – The true effect is probably close to the findings of this study.

- High – There is a high level of confidence that the findings represent the true effect, i.e. reality.

The 5 GRADE Domains

There are five overall factors which are used to help a practitioner work out the level of a particular study:

1. Risk of bias. In 2005 the Cochrane Collaboration in Oxford produced a tool that has become the standard risk-of-bias assessment tool, the Cochrane Collaboration’s Tool for Assessing Risk of Bias, which looks specifically at a range of different biases that can affect a study’s findings. The tool considers whether:

- The study uses quality scales. Quality scales are often inherently biased and based on opinions.

- The study examines its own internal validity. This means that the researchers extensively look for and report any potential biases the method or study might have. In other words, are the methods used in the study likely to lead to bias or not?

- The researchers have actively looked for sources of bias or influence in their results. Double-blind trials, where neither the participants (subjects) nor the researchers know which subject is getting which treatment or is in which group, are considered to be at the least risk of bias.

- The assessor/evaluator understands the methods used and can make a good judgement about them.

- There is a risk of bias in the way the data is presented or represented.

- The use or group to which you are applying the study introduces a risk of bias not associated with the study itself.

The Cochrane Collaboration’s Tool for Assessing Risk of Bias usually uses a 3-point grading system for judging bias:

- Low risk of bias

- High risk of bias

- Unclear risk of bias

2. Imprecision. How precise are the data and methods applied?

3. Inconsistency. Does the study show inconsistencies openly or does it try to mask them? Are there inherent inconsistencies that haven’t been reported?

4. Indirectness. How close is the situation / population of the study to the one it is being applied to? Does the study speak directly to and about your organisation or population, or is it being used in a more indirect way?

5. Publication bias. Does the publisher have a stake in the findings? For example, is this a study by a consultancy attempting to show how good its own methods are?

All of these potential biases downgrade the quality of the study.
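The way the five domains pull a study down through the four levels can be sketched in a few lines of Python. This is a deliberately simplified illustration, not the GRADE method itself: it assumes a study starts at High and loses exactly one level for each domain with a serious concern, whereas real GRADE ratings rest on reviewer judgement. The names `Certainty`, `DOMAINS` and `rate_certainty` are hypothetical.

```python
from enum import IntEnum
from typing import Mapping


class Certainty(IntEnum):
    """The four GRADE levels, ordered from weakest to strongest."""

    VERY_LOW = 0
    LOW = 1
    MODERATE = 2
    HIGH = 3


# The five GRADE domains described above.
DOMAINS = (
    "risk_of_bias",
    "imprecision",
    "inconsistency",
    "indirectness",
    "publication_bias",
)


def rate_certainty(
    concerns: Mapping[str, bool],
    start: Certainty = Certainty.HIGH,
) -> Certainty:
    """Drop one level per domain flagged as a serious concern.

    Assumption for this sketch: one level per domain, never below VERY_LOW.
    """
    unknown = set(concerns) - set(DOMAINS)
    if unknown:
        raise ValueError(f"Unknown domains: {sorted(unknown)}")
    downgrades = sum(1 for domain in DOMAINS if concerns.get(domain, False))
    return Certainty(max(int(start) - downgrades, int(Certainty.VERY_LOW)))


# Hypothetical study with concerns in two of the five domains.
example = rate_certainty({"risk_of_bias": True, "indirectness": True})
print(example.name)
```

Keeping the domains in an explicit tuple means a mistyped domain name raises an error rather than being silently ignored.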

Problems Getting Practitioners to use Evidence-Based Practice

So if evidence-based practice is so fantastic, why aren't more organisations using it? There are a number of problems at the core of evidence-based practice. Many of these problems, whilst mostly perceptual in nature, mean that practitioners and senior management in organisations are reluctant to engage with EBP.

For example, a 2003 study of occupational therapists [26] found that whilst most practitioners (96%, n=649) thought evidence-based practice was a good idea, they tended to refer to any form of research evidence only about 42% of the time when making difficult decisions. (It was not stated how systematic the use of research evidence was.) Over 96% of practitioners were found to prefer to rely on expertise and experience.

Part of the problem, the study concluded, is:

- A lack of time (Almost 92% of practitioners cited this as the reason for not engaging in EBP)

- A lack of skills (53.8% of practitioners)

- A lack of research evidence for this particular issue (67.9%)

- A lack of access to the research evidence (51.8%)

- Inability to understand or interpret the research evidence (47.8%)

And this is a group of practitioners working in health care, where evidence-based practice is considered the norm and where most occupational therapists are university educated.

Additionally, evidence-based practice is frequently perceived to be:

- difficult,

- hard work,

- slow and

- unnatural, by which I mean not part of the normal decision making process.

However, evidence-based practice can place practitioners into a situation of paradox or conflict. How do you proceed when your experience, the science and the goals or wishes of the client are in conflict or telling you different things? Additionally, it has been found [27] that digging around in the research evidence can frequently provide new knowledge or thinking that collides with and opposes the practitioner's current knowledge and thinking.

Indeed, it has been found that evidence-based practice can frequently be perceived as a threat to the practitioner [27]. A 2001 study [28] found, somewhat counter-intuitively, that more experienced and senior practitioners and managers tend to perceive evidence-based practice as a threat to their autonomy, personal status and the status of the profession!

Issues with the Evidence-Based Practice Inquiry Model

Within the evidence-based framework there is a process known as the evidence-based practice inquiry process, which is intended to help practitioners navigate these four forms of evidence. The evidence-based practice inquiry process is a series of stages or steps:

- Ask a question or create a problem statement

- Acquire the evidence

- Evaluate the evidence

- Apply the results of the evaluation process

- Analyse and adapt the practice in the light of the feedback from the application at stage four.

A 2022 study (29) found that there are a number of problems with this:

The aim of the evidence-based practice inquiry process, and of evidence-based practice itself, is to get practitioners to take into account all of the forms of evidence and the wider environmental factors (resources, conditions, etc.) and organisational factors (culture, goals, etc.) when they are trying to diagnose and solve problems, or make decisions in a structured way. The intention of the structure is to increase reliability and reduce error.

The study found a series of weaknesses with or criticisms about the current evidence-based practice inquiry process:

1. Problem definition issues

2. Information overload

3. Decision-making intractability

4. Lack of focus on tasks

5. Ignoring theory and conceptual frameworks

6. The inquiry process and evidence-based model may not be appropriate

7. Lack of advice for dealing with conflicting evidence

8. Values not considered

1. Problem definition

The first issue with the model is that the practitioner needs to start with a well-defined problem statement or question. What if the practitioner doesn’t know what the problem is, or what the main elements, structure and nature of the problem are? Additionally, what if the problem has been misdiagnosed and, as is common, the practitioner is dealing with a symptom rather than the core or causal issue(s)?

2. Information overload

The next issue with the model is the distinct possibility of information overload. The model states that there needs to be a review and consideration of all available research. In many areas of research there are often hundreds, if not thousands, of possible studies examining the topic. Further, there is likely to be an expanding number of associated studies which can usefully inform the issue at hand. The question then becomes how can practitioners usefully find, categorise, index, summarise and extract the pertinent information from the often huge array of sources available?

3. Decision-making intractability

The core issue here is that, given the issues with problem definition and the likelihood of information overload, people often struggle to make coherent and informed decisions based on the best evidence.

4. Lack of focus on tasks and constraints

The existing process largely focuses on asking questions and finding and evaluating evidence to find solutions. The evidence-based practice inquiry process, however, has little to say about what tasks or processes are needed to arrive at a good or reasonable solution, i.e. what is the plan and is the plan to solve this issue achievable?

One of the issues here is highlighted by what is known as constraint-composition theory, which states that any problem formulation should also include an account of all of the constraints or impediments to a solution. These include the time, resource, financial, skills, knowledge and other constraints that exist in any context. None of this is taken into account or explored by the process, leading to a somewhat idealistic and naive view of the evidence-based practice inquiry process.

5. Ignoring theory and conceptual frameworks

Given that there is no theory-neutral approach to anything, one would think that an examination of the theory or schema (model of thinking) being used and its associated assumptions would be important, not least because such an examination can highlight biases and gaps in knowledge. However, the evidence-based practice inquiry process does not include this.

Research and the evidence it provides exist within theoretical and conceptual frameworks. These frameworks make assumptions and have a perspective. Understanding what those frameworks are is essential for understanding the nature of the evidence presented.

6. Is the inquiry process and evidence-based model appropriate?

An example of this lack of consideration of the theoretical assumptions made whilst problem-solving is that the evidence-based practice model was originally designed to help medical consultants make good decisions about the best treatment for ill patients. It is a treatment/repair focused problem-solving model, which takes little or no account of causal issues. This has led to a model and method (the evidence-based practice inquiry process) designed to bring together the best sources to find the best treatment to heal, cure or reduce the impact of an illness or disease.

7. Dealing with conflicting evidence

There is almost no guidance on what to do when the evidence gathered is in conflict and suggests two opposite courses of action. What tends to occur in such situations is that some rule is applied to give priority to one set of evidence or course of action over the other. The question becomes which rule and why? Often preference is given to one set of solutions or forms of evidence based on a set of norms, but these often go unquestioned and end up as a form of bias.

The evidence-based practice inquiry process takes no account of what to do when the evidence is conflicting, or a paradoxical situation occurs. There appears to be an assumption that evidence-based practice is a straightforward step-by-step process of finding, evaluating and applying evidence to a problem.

8. The issue of values

Organisational, cultural, sectoral and individual values often underpin our perceptions: what we see as a problem, its magnitude, what are and are not acceptable solutions, what is ethical and what isn’t, what is valuable knowledge and what isn’t, and what is a good solution and what isn’t. The research, the evidence and the process of evidence-based inquiry are all enabled and constrained by a wide set of values, values which are rarely examined or questioned.

Rather, people tend to follow the rules those values help to construct and maintain.

The question here is what are those values and how do they impact the evidence-based practice inquiry process and its outcomes?

References

- Sackett, D., Rosenberg, W., Gray, J., et al. (1996). Evidence based medicine: what it is and what it isn't: It's about integrating individual clinical expertise and the best external evidence. BMJ, 312, 71-72. Doi: http://dx.doi.org/10.1136/bmj.312.7023.71

- APA Presidential Task Force on Evidence-Based Practice. "Evidence-based practice in psychology." The American Psychologist 61.4 (2006): 271.

- Barends, E., Rousseau, D. M., & Briner, R. B. (2014). Evidence-based management: The basic principles. - https://www.cebma.org/wp-content/uploads/Evidence-Based-Practice-The-Basic-Principles-vs-Dec-2015.pdf

- Aveyard, H. (2013). A beginner's guide to evidence-based practice in health and social care professions (2nd ed.). Milton Keynes: Open University Press. PACER Center, Evidence-Based Practices at School: A Guide for Parents, http://www.readingrockets.org/article/evidence-based-practices-school-guide-parents

- Gill Harvey, Alison Kitson, Implementing Evidence-Based Practice in Healthcare: A Facilitation Guide (London: Routledge, 2014), pp. 47-65.

- Murphy, M., MacCarthy, M. J., McAllister, L., & Gilbert, R. (2014). Application of the principles of evidence-based practice in decision making among senior management in Nova Scotia’s addiction services agencies. Substance abuse treatment, prevention, and policy, 9(1), 47.

- Straus, S. E., Glasziou, P., Richardson, W. S., & Haynes, R. B. (2018). Evidence-Based Medicine E-Book: How to Practice and Teach EBM. Elsevier Health Sciences.

- Satterfield, J. M., Spring, B., Brownson, R. C., Mullen, E. J., Newhouse, R. P., Walker, B. B., & Whitlock, E. P. (2009). Toward a transdisciplinary model of evidence‐based practice. The Milbank Quarterly, 87(2), 368-390.

- Bussières, A. E., Al Zoubi, F., Stuber, K., French, S. D., Boruff, J., Corrigan, J., & Thomas, A. (2016). Evidence-based practice, research utilization, and knowledge translation in chiropractic: a scoping review. BMC complementary and alternative medicine, 16(1), 216.

- McCarty, C. W., Hankemeier, D. A., Walter, J. M., Newton, E. J., & Van Lunen, B. L. (2013). Use of evidence-based practice among athletic training educators, clinicians, and students, part 2: attitudes, beliefs, accessibility, and barriers. Journal of athletic training, 48(3), 405-415.

- Manspeaker, S., & Van Lunen, B. (2011). Overcoming barriers to implementation of evidence-based practice concepts in athletic training education: perceptions of select educators.

- Coomarasamy, Arri, and Khalid S. Khan. "What is the evidence that postgraduate teaching in evidence based medicine changes anything? A systematic review." BMJ 329.7473 (2004): 1017.

- Hübner, Lena. "Reflections on knowledge management and evidence-based practice in the personal social services of Finland and Sweden." Nordic Social Work Research 6.2 (2016): 114-125.

- Knowledge Management Tools at https://www.knowledge-management-tools.net/knowledge-management-definition.html accessed 8th April 2018

- Melnyk, B. M., Gallagher‐Ford, L., Long, L. E., & Fineout‐Overholt, E. (2014). The establishment of evidence‐based practice competencies for practicing registered nurses and advanced practice nurses in real‐world clinical settings: Proficiencies to improve healthcare quality, reliability, patient outcomes, and costs. Worldviews on Evidence‐Based Nursing, 11(1), 5-15.

- Iowa Model Collaborative. (2017). Iowa model of evidence-based practice: Revisions and validation. Worldviews on Evidence-Based Nursing, 14(3), 175-182. doi:10.1111/wvn.12223

- Moore, F., & Tierney, S. (2019). What and how… but where does the why fit in? The disconnection between practice and research evidence from the perspective of UK nurses involved in a qualitative study. Nurse education in practice, 34, 90-96

- Guyatt GH, Oxman AD, Vist GE, Kunz R, Falck-Ytter Y, Alonso-Coello P, et al. GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ (Clinical research ed). 2008;336(7650):924-6.

- Guyatt G, Oxman AD, Akl EA, Kunz R, Vist G, Brozek J, et al. GRADE guidelines: 1. Introduction-GRADE evidence profiles and summary of findings tables. Journal of clinical epidemiology. 2011;64(4):383-94.

- Guyatt GH, Oxman AD, Kunz R, Atkins D, Brozek J, Vist G, et al. GRADE guidelines: 2. Framing the question and deciding on important outcomes. Journal of clinical epidemiology. 2011;64(4):395-400.

- Balshem H, Helfand M, Schunemann HJ, Oxman AD, Kunz R, Brozek J, et al. GRADE guidelines: 3. Rating the quality of evidence. Journal of clinical epidemiology. 2011;64(4):401-6.

- Guyatt, G. H., Oxman, A. D., Kunz, R., Vist, G. E., Falck-Ytter, Y., & Schünemann, H. J. (2008). What is “quality of evidence” and why is it important to clinicians? BMJ, 336(7651), 995-998.

- BMJ Best practice series: What is GRADE? Accessed at: https://bestpractice.bmj.com/info/toolkit/learn-ebm/what-is-grade/ on 3rd November 2019

- Guyatt, G., Oxman, A. D., Akl, E. A., Kunz, R., Vist, G., Brozek, J., ... & Jaeschke, R. (2011). GRADE guidelines: 1. Introduction—GRADE evidence profiles and summary of findings tables. Journal of clinical epidemiology, 64(4), 383-394.

- Renolen, Å., Hjälmhult, E., Høye, S., Danbolt, L. J., & Kirkevold, M. (2020). Creating room for evidence‐based practice: Leader behavior in hospital wards. Research in nursing & health, 43(1), 90-102.

- Bennett, S., Tooth, L., McKenna, K., Rodger, S., Strong, J., Ziviani, J., Mickan, S., & Gibson, L. (2003). Perceptions of evidence-based practice: A survey of Australian occupational therapists. Australian Occupational Therapy Journal, 50(1), 13–22.

- Dubouloz, C.-J., Egan, M., Vallerand, J., & von Zweck, C. (1999). Occupational Therapists’ Perceptions of Evidence-Based Practice. American Journal of Occupational Therapy, 53(5), 445–453.

- Wiles, R., & Barnard, S. (2001). Physiotherapists and Evidence Based Practice: An Opportunity or Threat to the Profession? Sociological Research Online, 6(1), 62–74.

- Ward, T., Haig, B. D., & McDonald, M. (2022). Translating science into practice in clinical psychology: A reformulation of the evidence-based practice inquiry model. Theory & Psychology, 32(3), 401–422
