- in Blog by David Wilkinson

The role of experience in evidence-based practice

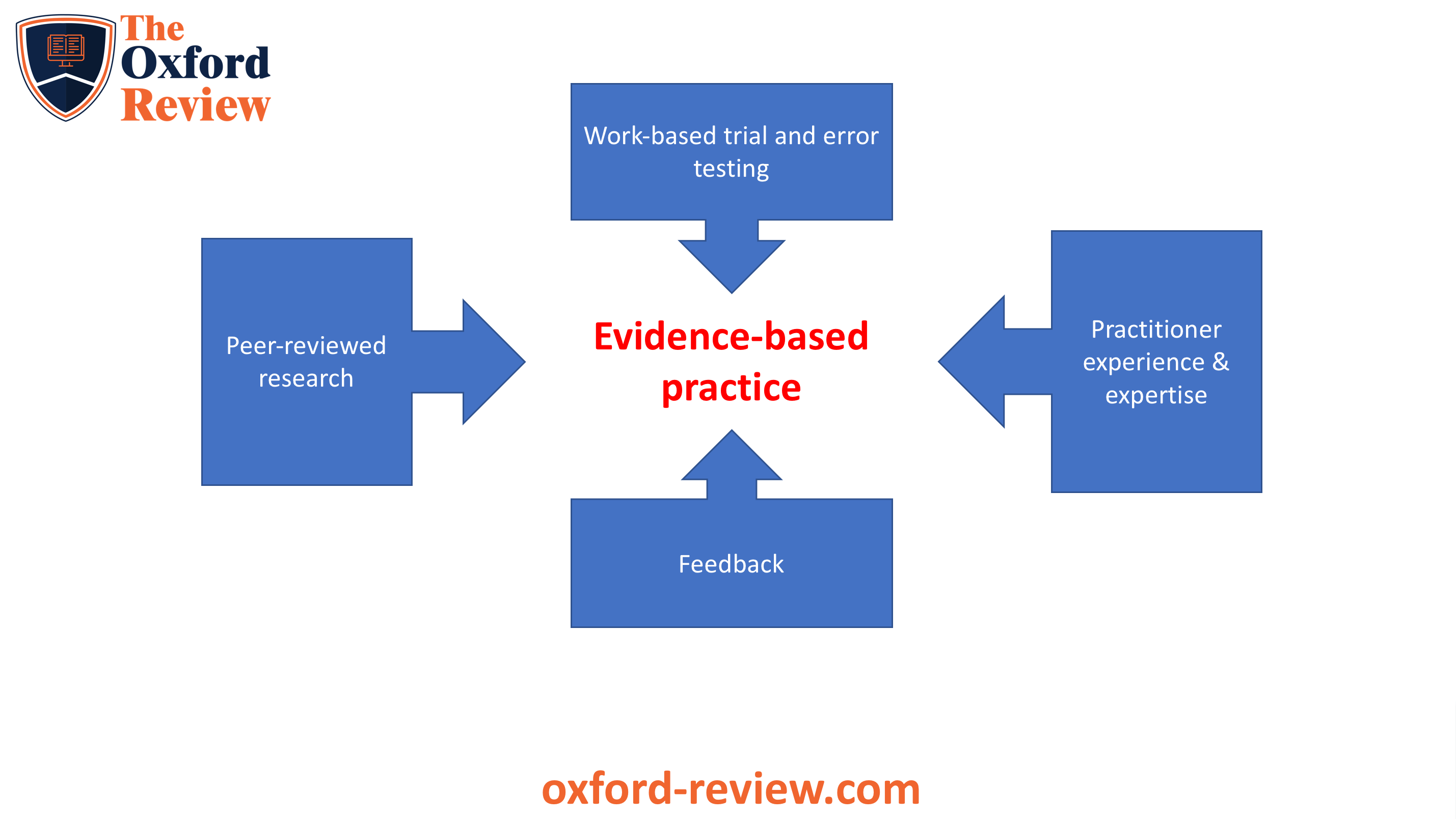

Evidence-based practice does not depend solely on peer-reviewed research. Experience plays a vital role in evidence-based practice, but not just any old experience counts here. There is experience, and there is experience. It matters that we understand the role experience plays in evidence-based practice, and which types of experience count.

- Introduction – experience matters

- The role of context

- The problem with experience

- Why making decisions with evidence is significantly different from evidence-based decision making

- Evidence-based decision making

- Experience of the purposes of research

- Experience of uncertainty

- Range of lay reactions to uncertainty

- Conclusion – experience is a central part of evidence-based practice

Introduction – experience matters

As evidence-based practice gains ground across industries and sectors, and matures in practice and understanding, cul-de-sacs, false starts and myths inevitably develop. These help us build a better understanding of evidence-based practice and how to implement it: you know, the practice bit. As ideas, thinking and practices emerge, they are stress tested as people engage with them in the face of day-to-day organisational reality. In effect, as practice develops and discussions widen and narrow, original practices and thinking are challenged in their own version of peer review.

Evidence-based practice is essentially a practice. It involves people taking action in their own contexts and realities, developing experience, questioning, nudging and problem solving. Research evidence, ideas, perspectives and practices are stress tested 'in situ', in a live, ever-shifting laboratory of life. This is not about slavishly following academic research, as some (primarily academics) would have you believe.

The role of context

The problem with research is that it was either conducted in a particular context or, in the case of meta-analyses and systematic reviews, removed from any single context. There are a number of different contexts that can change research findings or results, including:

- Era-based contexts. Fashions in thinking, cognition and analysis come and go. We don't think the way people did in the 1950s or the 1800s. Thinking changes and, as a result, so do research and its findings.

- Cultural contexts. These can change research findings significantly, at regional, national and micro (organisational) levels.

- Technological contexts. These change frequently.

These contextual issues may or may not be factors in particular research findings. The issue is that no research study can account for every contextual variable. This is not an argument that research is invalid because of contextual issues. It isn't. Randomised controlled trials, meta-analyses and systematic reviews are, by and large, generalisable. However, the decision about whether, and how far, a particular study or series of studies applies usually rests with someone with experience.

The problem with experience

There is a problem, though. In organisations, the people making decisions usually have little knowledge of the research evidence, scant understanding of the limitations of research, and almost no expertise in evidence-based decision-making. So whilst experience is normally a key component of the evidence-based decision-making process, few people in organisations have the right kind of experience. They may have experience of their job or of the organisation but, unlike most clinical consultants in the health service, they tend to have hardly any experience of using research in an evidence-based way.

Medical clinicians are brought up and trained to study the latest research on a continual basis. It’s part of their professional creed and culture. They can cite the most recent medical research and what the latest thinking is in the profession, not just what is happening in the organisation or industry. They know the research. They understand the research. They know its strengths and limitations. When they combine this with their professional experience, it becomes a powerful tool.

Few people in organisations have this level of expertise and experience, not just in their job but in using academic research and in practising evidence-based operations and decision-making. Many in organisations would disagree and state categorically that they make evidence-based decisions. However, there is a distinction to be made between evidence-based decision-making and making decisions using evidence, and it is a lot more than semantics and playing with words. There is a very real difference.

Why making decisions with evidence is significantly different from evidence-based decision making

Take two examples to make the comparison. The first is an experienced organisational leader and the second is an experienced medical consultant.

The organisational leader tends to use a mixture of experience, organisational and market data, colleagues' opinions and cultural norms. Most people see this as making decisions based on evidence.

A medical consultant, on the other hand, will use all of the above: experience, data, colleague opinion and cultural norms. However, they will also use two other vital sources in their decision-making:

- Academic or scientific research evidence

- A shared decision-making process with the patient or end-user.

Evidence-based decision making

The difference is that the medical practitioner or airline pilot is up to date with the latest research evidence and has enough academic education and research experience of their own to be capable not only of understanding the research evidence, but also of judging how applicable it is to the current situation. They understand at a deep level how to interpret the research, how and when to apply it, and when not to, or when merely to use it as 'information'. Few organisational leaders have these skills or this investment in research. Many dismiss such endeavours as merely "academic", viewing them with intense scepticism and cynicism as not grounded in reality.

The criticism that organisational leaders and managers lack the kind of 'scientific' research evidence available to medical practitioners has little basis. There is, without doubt, a wealth of very good academic research evidence on every aspect of leadership, management, organisational development, business development and so on that could be drawn upon. These potentially enormously useful resources go unused largely because many people in organisational contexts lack both grounding in evidence-based practice and time.

Medical, air transportation, legal and similar professionals have research and evidence-based practice running through their veins. They are constantly engaged with the research, not just with the operational aspects of their work, and are continually reading the latest research and cases. They do use all sources of data to make decisions but, first and foremost, they are continual students and researchers.

Experience of the purposes of research

There is another level of experience that evidence-based practitioners have, and it separates making decisions based on evidence from making evidence-based decisions. Evidence-based practitioners have a deep understanding of the issues of bias, both in research and in their own decision-making. They don't assume they are right and they don't assume they are being objective. In fact, they assume the opposite and go all out to disprove their own theories and conclusions. This is the scientific method.

People who use evidence to make their decisions frequently have little regard for issues of objectivity and bias, whether their own or those in the research. They tend to look for evidence that proves a point or a perspective, often using evidence to reinforce a bias or to back up a decision.

It is this experience with the aims of research (to remove bias and increase objectivity) that highlights a different level of experience and expertise that is a fundamental and inseparable part of evidence-based practice.

Evidence-based practitioners are crucially concerned about bias, objectivity and understanding what forces or factors influence their decision making.

This highlights evidence-based practitioners' concern with their understanding of:

- Research methodology

- Themselves (self-awareness)

Both are usually far less of a consideration, if they are considered at all, for people who simply use evidence to make decisions.

Experience of uncertainty

Another area evidence-based practitioners have had to consider is the distinction between levels of certainty and uncertainty, and its impact on their decision-making, thinking, emotions, beliefs and attitudes.

Whilst everyone has to deal with uncertainty and ambiguity, the scientist-practitioner's reactions to them are usually rather different from those of many non-evidence-based practitioners.

Range of lay reactions to uncertainty

There are primarily four clusters of reactions to uncertainty:

- Denial of the uncertainty or ambiguity. This is usually achieved by imposing on the situation an order that does not exist; for example, blaming the wrong cause and not engaging in…

- Discomfort with, and avoidance of, the uncertainty. This is usually achieved by prematurely resolving the symptoms of the uncertainty or ambiguity.

- Acceptance of the uncertainty, but with no strategy to deal with it or benefit from it.

- Complete comfort with uncertainty, diversity of thinking and ambiguity, together with the capability to work productively in situations of uncertainty.

A number of studies have found that people of this fourth type (known as generative individuals) are not only highly evidence-based but also highly concerned with objectivity, the removal of bias and an experimental, evidence-generating approach. This requires the ability to resolve paradoxes and to incorporate disparate and contradictory perspectives and evidence.

It has been found that people in the first three modes tend to polarise their thinking and evidence gathering rather than looking for contradictions and learning from them. Generative individuals are essentially concerned with learning, without trying to project or predict what they will learn according to some agenda or other.

Conclusion – experience is a central part of evidence-based practice

Evidence-based practice does not depend solely on peer-reviewed research. The point here is that experience is a core part of evidence-based practice. However, this goes far beyond an individual's familiarity with, and knowledge gained from exposure to, their industry.

It also incorporates experience of objectivity, experimentation and critical thinking. Further, these 'scientific' approaches and ways of working give an individual the capability to deal with uncertainty productively, and to resolve and incorporate paradoxes and contradictory evidence.

References

Med Roundtable Gen Med Ed. (2012) 1(1): 75-84.

Wilkinson, D.J. (2006) The Ambiguity Advantage. London: Palgrave Macmillan.
